Background: what is NVMeOF and how does it differ from NVMe?

Non-Volatile Memory Express (NVM Express, or more commonly NVMe) and NVMe over Fabrics (NVMe-oF, NVMeOF) are generating a lot of hype. The term “NVMe” still confuses many people: some use it to denote NVMe (PCIe) SSDs, others to denote the NVMe protocol.

NVMe SSDs are the new default in business laptops and will eventually supersede SATA and SAS SSDs in the data center. A new technology superseding an old one is not news in itself; however, the transition is often surprisingly fast.

The storage industry is embracing NVMe and NVMeOF in an effort to cope with unprecedented demands for lower latency and higher application performance.

NVMe SSDs have already gained some adoption, while NVMe over Fabrics is still in its infancy.

What exactly is NVMe?

NVMe is an interface specification, like SATA, which allows fast access to direct-attached solid-state devices. It is optimized for NAND flash and next-generation solid-state storage technologies. Unlike SATA and SAS devices, NVMe devices communicate with the system CPU directly over high-speed PCIe connections, so a separate storage controller (HBA) is not required. NVMe devices come in a variety of form factors: add-in cards, U.2 (aka 2.5 inch) and M.2.

Because NVMe devices communicate directly with the system CPU, they can achieve up to 1 million IOPS, latency as low as 3 microseconds, and low CPU usage. This makes NVMe SSDs faster than SATA SSDs. A low-cost NVMe SSD can be about 2 times faster than a similarly priced SATA SSD. At the high end, NVMe SSDs deliver approximately 10 times more IOPS and 10 times lower latency.

These technical capabilities result in business benefits. For example, with NVMe, customers get much faster response times, and companies are able to process a higher volume of business transactions per second. Higher IOPS and lower latency deliver a better end-user experience and increase the competitiveness of the business.

A recent comparison made by Intel showed that an NVMe SSD delivered 4.7 times higher performance than a SATA SSD.
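As a rough sanity check on how the IOPS and latency figures above relate, Little's law says that the average number of I/Os in flight equals throughput multiplied by latency. The Python sketch below applies it to the headline numbers quoted above. The function names are illustrative, and the 100-microsecond latency in the second call is a hypothetical value chosen only to show the effect of queue depth, not a measurement.

```python
def outstanding_ios(iops: float, latency_s: float) -> float:
    """Little's law: average I/Os in flight = throughput * latency."""
    return iops * latency_s


def max_iops(queue_depth: int, latency_s: float) -> float:
    """Upper bound on IOPS when at most `queue_depth` I/Os are in flight."""
    return queue_depth / latency_s


# Headline figures from the text: 1 million IOPS at 3 microseconds.
print(f"I/Os in flight: {outstanding_ios(1_000_000, 3e-6):.1f}")

# Hypothetical device with 100 microseconds per I/O and 32 I/Os in flight.
print(f"IOPS ceiling: {max_iops(32, 100e-6):,.0f}")
```

Read this way, sustaining 1 million IOPS at 3 microseconds needs only about three I/Os in flight, whereas a device with higher per-I/O latency needs a proportionally deeper queue to reach the same rate.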

What exactly is NVMe over Fabrics?

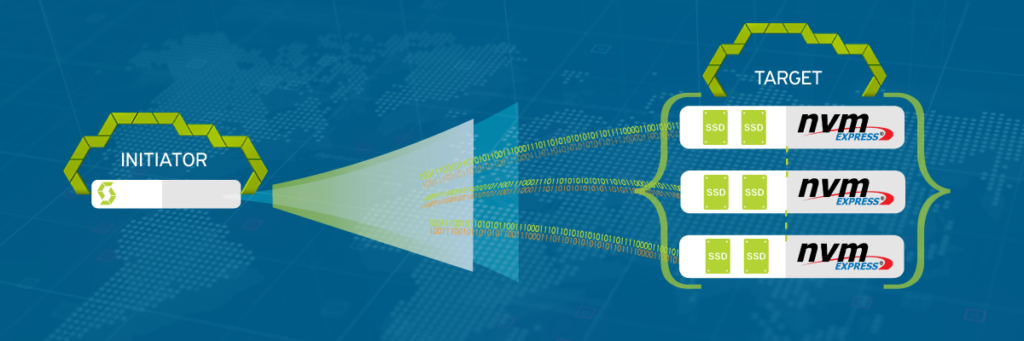

NVMeOF, or NVMe over Fabrics, is a network protocol, like iSCSI, used to communicate between a host and a storage system over a network (aka fabric). It was designed around RDMA and can use any of the RDMA technologies, including InfiniBand, RoCE and iWARP. RDMA is not strictly required, though: the specification also defines Fibre Channel and TCP transports.
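To make the host-to-target relationship concrete, the sketch below builds (but does not run) the `nvme connect` command line that a Linux host would typically use with the nvme-cli tool to attach a remote subsystem over an RDMA fabric. The target address and the subsystem NQN are hypothetical placeholders, and the helper function is illustrative only; port 4420 is the port conventionally used by NVMe over Fabrics targets.

```python
import shlex

NVME_OF_PORT = "4420"  # port conventionally used by NVMe over Fabrics targets


def build_connect_cmd(traddr: str, nqn: str, transport: str = "rdma",
                      trsvcid: str = NVME_OF_PORT) -> list[str]:
    """Return the argv for `nvme connect`; nothing is executed here."""
    return [
        "nvme", "connect",
        "-t", transport,   # fabric transport type
        "-a", traddr,      # target (transport) address
        "-s", trsvcid,     # transport service id, i.e. the port
        "-n", nqn,         # NVMe Qualified Name of the remote subsystem
    ]


# Hypothetical target address and subsystem name, for illustration only.
cmd = build_connect_cmd("192.0.2.10", "nqn.2018-07.com.example:subsys1")
print(shlex.join(cmd))
```

Once connected, the remote namespace appears to the host as an ordinary NVMe block device, which is what makes remote storage look local to applications.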

Compared to iSCSI, NVMeOF has much lower latency, in practice adding just a few microseconds to cross the network. This makes the performance difference between local and remote storage very small.

Like any network protocol, it can be used to access a simple featureless storage box (JBOF) as well as a feature-rich block storage system (SAN). It can also be used to access a storage system built with previous-generation (SATA) devices. However, it is strongly associated with NVMe devices because of the performance benefits of the combination.

NVMe over Fabrics (NVMeOF) is an emerging technology that gives data centers unprecedented access to NVMe SSD storage.

To summarize: NVMeOF enables faster access between hosts and storage systems, which in turn drives new levels of business agility and competitiveness.

NVMeOF: state-of-the-art or not?

As NVMeOF gains popularity, many vendors are jumping on the bandwagon to deliver very fast, low-latency storage systems. Often these systems are marketed as NVMeOF when in fact they use a proprietary native protocol specific to the solution. There’s nothing wrong with this approach, and it might even be superior in many ways; it’s just not NVMeOF.

NVMeOF itself has some drawbacks which might hinder its adoption:

Firstly, NVMeOF is a standard still in its infancy. Each vendor has its own way of implementing the standard in its solutions. This leads to different implementations of NVMeOF, which are potentially incompatible. If you want it to work in your deployment, you’ll have to take extra care in designing and implementing an NVMeOF solution: get a reference architecture and follow it to the letter.

Secondly, NVMeOF is in essence a glorified iSCSI, except that it is faster. NVMeOF copies the outdated architecture and concepts of the traditional SAN model, which was developed some 40 years ago. It does not play well with current technologies and concepts such as fully automated API control, software-defined storage (SDS), hyper-converged infrastructure and distributed storage (DS).

In this model, NVMeOF is a point-to-point link between one initiator host and one target. This architecture conflicts with implementing high availability and scale-out in the storage system. It is not impossible, but NVMeOF with HA and scale-out is inferior in many ways to a solution engineered for these requirements from the ground up. Because NVMeOF assumes a connection to a single target, it needs extra hops over the network when deployed with a modern software-defined or distributed storage solution, which increases latency and reduces IOPS capability.
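The cost of an extra hop can be illustrated with a simple additive latency model. This is a sketch under stated assumptions, not a measurement: the 80-microsecond device latency and the 5-microsecond per-hop cost are hypothetical values, and the model ignores queuing and software overhead.

```python
def end_to_end_latency_us(device_us: float, hop_us: float, hops: int) -> float:
    """Additive model: media latency plus a fixed cost per network hop."""
    return device_us + hop_us * hops


def iops_per_outstanding_io(latency_us: float) -> float:
    """With one I/O in flight, throughput is the inverse of latency."""
    return 1_000_000 / latency_us


DEVICE_US = 80.0  # hypothetical device latency
HOP_US = 5.0      # hypothetical cost of one network hop

# One hop: the host talks straight to the node that owns the data.
direct = end_to_end_latency_us(DEVICE_US, HOP_US, hops=1)
# Two hops: the host talks to a single target, which forwards the request.
forwarded = end_to_end_latency_us(DEVICE_US, HOP_US, hops=2)

for name, latency in (("direct", direct), ("forwarded", forwarded)):
    rate = iops_per_outstanding_io(latency)
    print(f"{name}: {latency:.0f} us, {rate:,.0f} IOPS per outstanding I/O")
```

Under this model every additional hop adds its full cost to each I/O, so the penalty matters most when the underlying devices are fast.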

Thirdly, most implementations of NVMeOF lack support for end-to-end data integrity. End-to-end data integrity checking is critical, especially considering the vast amounts of data these storage systems are expected to process at blazing speeds. Without it, silent data corruption becomes a real risk. It is not impossible to implement in NVMeOF, but in practice it is not done, at least not yet.

Overall, there is a tendency to use NVMeOF to gain performance at the expense of everything else that is expected of a storage system, including compromises in system availability, data durability and storage system capabilities.
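The idea behind end-to-end integrity checking can be sketched in a few lines: the host computes a checksum before the data leaves, the checksum is stored with the data, and the host verifies it when the data comes back. The example below uses CRC-32 from Python's standard library purely for illustration; it is not how NVMeOF or any particular storage product implements protection, and the function names are hypothetical.

```python
import zlib


def protect(block: bytes) -> tuple[bytes, int]:
    """Attach a CRC-32 computed on the host before the block is sent."""
    return block, zlib.crc32(block)


def verify(block: bytes, checksum: int) -> bool:
    """Recompute the CRC-32 on read and compare it with the stored value."""
    return zlib.crc32(block) == checksum


block, checksum = protect(b"business transaction record" * 100)

# Simulate a single bit flipped somewhere between host and media.
corrupted = bytearray(block)
corrupted[0] ^= 0x01

print("intact block verifies:", verify(block, checksum))
print("corrupted block verifies:", verify(bytes(corrupted), checksum))
```

Without such a check, the corrupted block would be returned to the application as if it were valid.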

Summary

NVMe and NVMeOF promise an order-of-magnitude speed improvement for storage systems. While these are early days for these technologies, there is a strong driver for their adoption in the data center. Some vendors are already leading the way with systems running in production. Still, potential users of these technologies have to read between the lines in order to get these 10-fold improvements.

Interested in Building Scalable and High-Performance NVMe Storage?

StorPool can provide outstanding NVMe storage for your cloud. Get in touch with us to find out more.

Post update: 2018-07

Performance test of an NVMe-based shared storage system, showing the speed of NVMe-powered shared storage built with software-defined distributed storage: http://storpool.slm.dev/blog/storpool-storage-performance-test-3-nvme-storage-servers-0-06ms-latency

If you have any questions, feel free to contact us at info@storpool.slm.dev