One of the main capabilities for which StorPool is recognized globally is its storage performance. Even at a small scale it delivers very high performance, and it scales linearly as the storage system grows. This speed, combined with an affordable price point, makes StorPool a preferred choice for companies aiming to achieve both great performance and high availability. Even a small StorPool setup can replace a multi-million dollar all-flash storage array.

To demonstrate what StorPool is capable of, we are publishing the performance metrics of our latest small, NVMe-powered demo lab. We measure performance in IOPS and latency, and also show the correlation between these two metrics as seen from an individual initiator.

Low latency, and not IOPS alone, is one of the most important metrics for a storage system. Real production workloads typically run at low queue depth, which makes them latency-sensitive, and they also experience occasional bursts (many operations submitted at the same time). For real workloads, therefore, both latency under light load and burst-handling capability are important.

The storage system under test consists of 3 storage nodes equipped with NVMe SSDs.

Specs of the tested storage system:

3 storage servers (nodes), each with:

– 1RU chassis with 4x hot-swap NVMe drive bays

– CPU: 1x Intel Xeon W-2123 4-core CPU

– Memory: 16 GB RAM

– Boot drive: Intel S3520 150GB

– NIC: Mellanox ConnectX-5 dual-port 100G QSFP28, PCIe x16 – used as dual-port 40G

– Pool drives: 4x Intel P4500 1TB (SSDPE2KX010T7)

– StorPool version: 18.01, with RDMA support and NVMeOF-like networking capabilities

Network/Switches: Dual Mellanox SX1012 with QSFP+ DAC cables

Total capacity: 12 TB raw = 3.6 TB usable (3-way replication leaves 4 TB, less 10% reserved for the CoW (Copy-on-Write) on-disk format and safety checksums).
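The usable-capacity figure follows from simple arithmetic on the specs above. Here is a minimal sketch in Python; the function name `usable_capacity_tb` is illustrative only and is not part of any StorPool tool. It assumes the 10% reserve is a flat fraction, so the result is the same whether it is applied before or after dividing by the number of copies.

```python
def usable_capacity_tb(nodes, drives_per_node, drive_tb, replicas, overhead):
    """Estimate usable capacity from raw capacity.

    Assumes every block is stored `replicas` times and that a flat
    fraction `overhead` is reserved (here: CoW on-disk format and
    checksums, as stated in the post).
    """
    raw_tb = nodes * drives_per_node * drive_tb
    return raw_tb / replicas * (1.0 - overhead)


# Lab from this post: 3 nodes x 4 drives x 1 TB, 3 copies, 10% reserved.
usable = usable_capacity_tb(nodes=3, drives_per_node=4, drive_tb=1.0,
                            replicas=3, overhead=0.10)
print(f"{usable:.1f} TB usable")  # prints "3.6 TB usable"
```

The same function can be used to estimate how usable capacity changes with more or larger drives per node.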

Note: the hardware used here is optimized for a small lab setup. Production systems usually have more and much bigger NVMe drives, in order to improve density and optimize $/IOPS and $/GB.

To run the storage performance test, we created 3x StorPool volumes (LUNs) as follows:

– volume size: 100 GB each; total active set for the tests: 300 GB

– total cache across the 3 StorPool servers: 6.3 GB (about 2% of the active set), to minimize cache effects

– each volume with 3 copies on drives in 3 different storage servers

– each volume is attached to a different initiator host
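The active-set and cache figures in the list above can be checked with a few lines of Python. This is only a sanity check of the stated numbers; the variable names are illustrative.

```python
volumes = 3
volume_gb = 100        # size of each volume, from the list above
copies = 3             # each volume is stored as 3 copies
total_cache_gb = 6.3   # total StorPool server cache across the 3 nodes

active_set_gb = volumes * volume_gb        # logical data touched by the tests
stored_gb = active_set_gb * copies         # data placed on drives, all copies
cache_share = total_cache_gb / active_set_gb

print(f"active set: {active_set_gb} GB, on drives: {stored_gb} GB, "
      f"cache share: {cache_share:.1%}")
```

With a cache this small relative to the active set, most random reads have to be served from the NVMe drives rather than from RAM.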

Then we ran 3x FIO workloads, one per volume:

– FIO workloads, initiators and storage servers running on the same physical servers (hyper-converged deployment)

– FIO parameters: ioengine=aio, direct=1, randrepeat=0

– rw parameter = read, write, randread, randwrite, randrw – depending on the test

– Block size = 4k, 1M – depending on the test

– Queue depth = 1, 32, 128 – depending on the test

Some tests are limited by the number of initiators and by the CPU resources allocated to the workload, initiators and storage software, as the storage sub-system was not designed for running applications.

The same system would be able to deliver more throughput to a larger number of external compute servers/workloads.

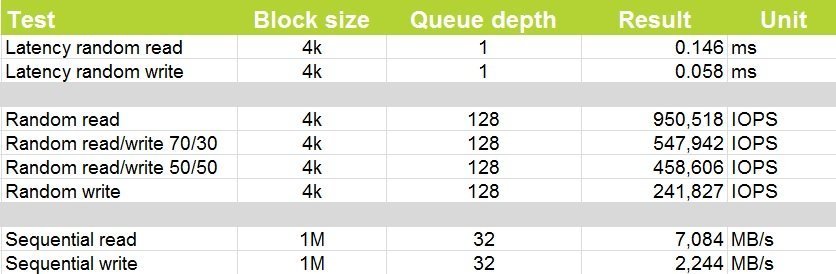

The results: aggregate performance of the system across all initiators:

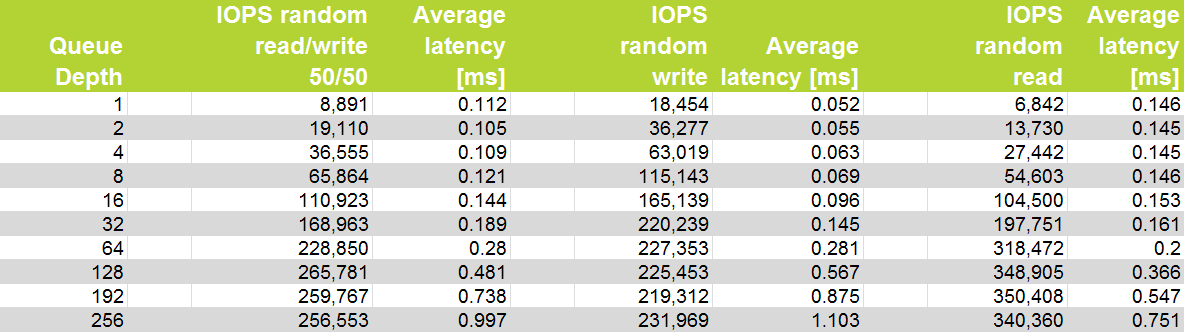

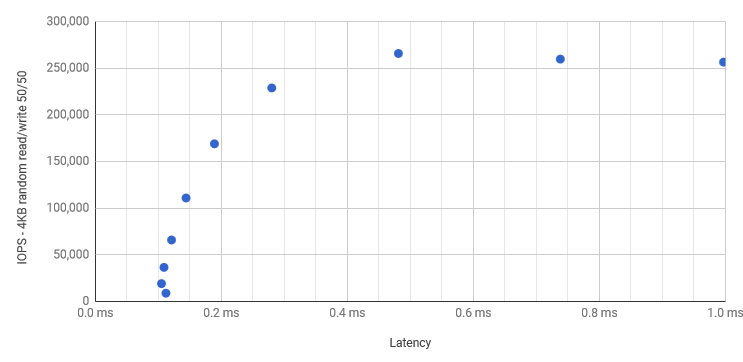

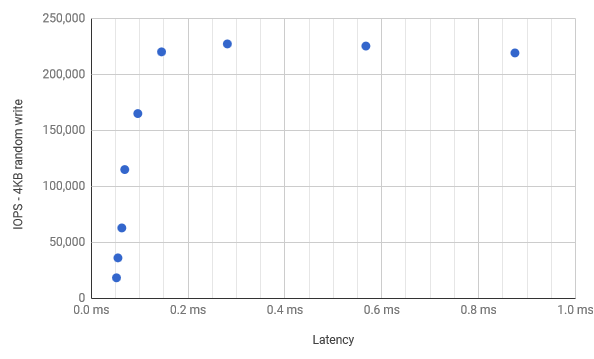

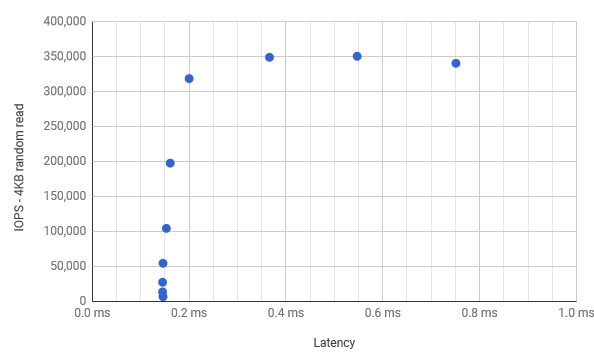

The correlation between IOPS and latency of the storage system under different workloads, single initiator:

This test is designed to show the relation between latency and IOPS of the storage system, as seen from an individual initiator.

IOPS vs. Latency: 4KB random read/write 50/50

IOPS vs. Latency: 4KB random write

IOPS vs. Latency: 4KB random read
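For a single initiator that keeps a fixed number of operations in flight, IOPS and mean latency are tied together by Little's law: queue depth = IOPS × latency. The minimal sketch below illustrates the relation. The latency values in it are hypothetical placeholders chosen for round numbers; they are NOT measurements from this test.

```python
def iops_from_latency(queue_depth, latency_us):
    """Little's law: in-flight operations = IOPS * mean latency."""
    return queue_depth / (latency_us / 1_000_000)


def latency_from_iops(queue_depth, iops):
    """Inverse form: mean latency in microseconds for a given IOPS."""
    return queue_depth / iops * 1_000_000


# Hypothetical (queue depth, mean latency in microseconds) pairs,
# NOT results from this post.
examples = [(1, 100.0), (32, 400.0)]
for qd, lat_us in examples:
    iops = iops_from_latency(qd, lat_us)
    print(f"QD={qd:>3}  latency={lat_us:.0f} us  ->  {iops:,.0f} IOPS")
```

This is why latency under light load matters: at queue depth 1, the IOPS a single thread can reach is simply the inverse of the per-operation latency.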

The storage system presented here is recommended for public and private cloud builders who are looking for a robust shared storage system with the best price/performance in terms of latency and IOPS.

If you have any questions, feel free to contact us at info@storpool.slm.dev