Why storage pricing does not mean anything anymore

In every storage project the question of price inevitably comes up, yet the enterprise storage cost per TB or per GB is currently one of the most misunderstood and confusing parts of the product selection process. Not so long ago, comparing storage systems was relatively straightforward: all systems were priced on a $/GB basis and were more or less similar in specification, i.e. HDDs, CPUs, controllers, and capacity. Of course, offers were “normalized” to adjust for differences in performance, capacity and feature set.

However, in the software-defined era, things are not so simple anymore. The older technologies are still here, joined by a large variety of “new age” technologies: traditional SANs, cached SANs, hybrid arrays, all-flash arrays, different flavors of storage software, software-defined storage and distributed storage, to name just a few. As a result, it has become increasingly difficult to understand, let alone compare, the different storage alternatives.

The task becomes even harder because the marketing departments of many vendors offer “marketing-defined storage”. They package and position hypothetical concepts and great-sounding solutions which in fact have no practical application. In some cases they mislead the customer, intentionally or by mistake, or misrepresent the capabilities or the price (or both) of the product itself.

This series of articles aims to shed some light on the matter of storage pricing for people who are searching for a new storage system, but who are not experts on the matter themselves.

How many TBs is 1 TB (or how many minutes is one hour)?!

GB – the good old metric of storage systems. $5/GB does not mean a thing now. And just before we start – let us scale up and use TB, since volumes have long ago outgrown the good old GB metric. So $5,000/TB.

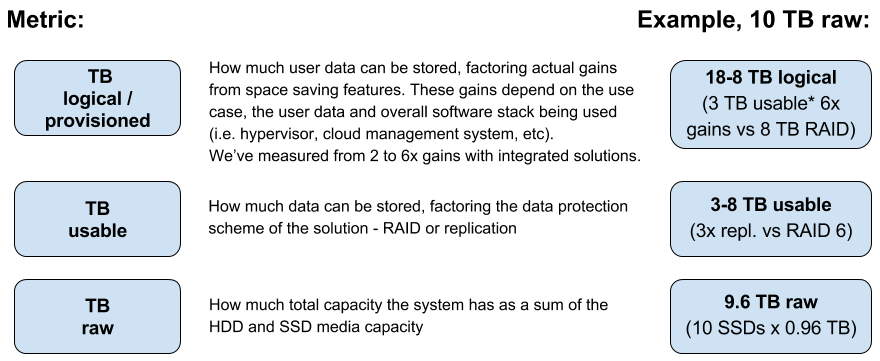

As it turns out, in storage terms one hour might not be exactly 60 minutes anymore. “I need 10 TB.” 10 TB of what? Does it mean TB raw or TB usable? Is it TB usable or TB logical? Is it TB “usable” usable, or does the TB “usable” figure already include some unknown factor of potential savings from data reduction features? We will get to the last question later on; let us cover the first ones first. Here is a diagram explaining which is which:

TB or TiB

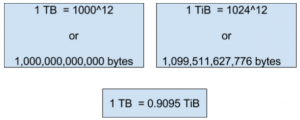

While we are discussing this, let us point out something else: the difference between terabytes (TB) and “binary terabytes” (TiB). Every hard disk or SSD that you buy is sized in TB, so a 1 TB HDD holds 1000^4 bytes, or 1,000,000,000,000 bytes. However, most operating systems (OSes) and file systems report space in TiB, often while still labelling it “TB”. For them, one unit is 1024^4 bytes, or 1,099,511,627,776 bytes. In other words, roughly 9% of your raw capacity appears to “disappear” as soon as you start storing data on it.

So if your raw capacity is 10 TB, the OS will show only about 9.1 TiB available for data (minus some system overhead). This works in the other direction as well: if you are a public cloud, you usually bill customers by the capacity visible in the OS/FS/VM, and each of those binary units is approximately 10% larger than a decimal TB on the underlying drives. (For simplicity, and to “normalize”, we will not look into data protection or space-saving technologies here.)
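The arithmetic behind the TB/TiB gap is easy to check for yourself. Here is a minimal Python sketch; the function names `tb_to_tib` and `tib_to_tb` are made up for this illustration, and the only assumptions are the two definitions above (1 TB = 1000^4 bytes, 1 TiB = 1024^4 bytes).

```python
# Decimal terabyte, as printed on a drive label.
TB_BYTES = 1000 ** 4
# Binary terabyte (tebibyte), as reported by many operating systems.
TIB_BYTES = 1024 ** 4


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (drive label) to binary terabytes (OS view)."""
    return tb * TB_BYTES / TIB_BYTES


def tib_to_tb(tib: float) -> float:
    """Convert binary terabytes (OS view) to decimal terabytes (drive label)."""
    return tib * TIB_BYTES / TB_BYTES


if __name__ == "__main__":
    raw_tb = 10
    print(f"{raw_tb} TB of raw drives shows up as {tb_to_tib(raw_tb):.3f} TiB")
    visible_tib = 10
    print(f"{visible_tib} TiB visible in the OS needs {tib_to_tb(visible_tib):.3f} TB of drives")
```

For 10 TB of drives this gives a little over 9 TiB, and serving 10 TiB to a customer takes just under 11 TB of drives, before any overhead for data protection.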

Expect a new pricing concept for enterprise storage cost per TB, per GB

We just went through the switch from the on-premises model (“I buy my servers, storage, and network and they cost $X”) to the cloud model, where these resources are billed per hour or per month. The same thing is happening with the switch to software-defined storage. Even when you buy your own systems, manage them yourself and co-locate them (so you never actually touch them), your underlying model changes. In many cases the hardware is leased and the software is supplied via a subscription that follows your actual capacity needs and the income from this infrastructure. In other words, IT is moving from a CapEx model to an OpEx model (in fact, the entire global economy is moving in this direction, should you have overlooked this trend).

What are the data reduction/capacity saving features? What is the use case?

Storage was already becoming complex, with more and more functionality: first RAID, then compression and deduplication (dedup), etc. However, with the incredible amount of intelligence now built into software, it is impossible to know exactly how much space 1 TB of data will actually take up on the underlying storage system. Thin provisioning, TRIM/DISCARD, snapshots and clones, zero detection, compression, dedup, erasure coding: there is a long list of features that deliver different levels of space savings and affect the actual capacity of a storage system. However, these savings vary, depending on the customer’s actual use case and data.

So even two companies that look exactly the same, say two IaaS providers each providing 50 TB of logical storage to their customers, will in fact store different amounts of data on their storage systems if one company’s customers are enterprises and the other’s are SMBs. This is because the use cases and the data of their respective customers differ, and are affected to a different extent by a given set of data reduction/space-saving features.

“Dedup” does not cure everything

It should be noted that different features are suited to different use cases and they always have pros and cons, i.e. you always lose one thing in order to gain something else. For example, many of the “all-flash” vendors promote deduplication and compression as the answer to all storage problems, or at least as a justification for their high prices. Say a system from such a vendor costs $12/GB usable ($12,000/TB, ridiculous, eh?). Because the vendor assumes you will get, say, 5 times savings from their magical features, you supposedly end up at an acceptable effective price of $2,400/TB. However, there are two problems with this theory:

- It assumes that you achieve the maximum savings the technology can deliver, which happens only if your use case is a perfect fit for it. In reality, it is very hard to achieve these levels of “data shrink”.

- Your use case might not be a good fit for these features at all. They may work well in an environment where thousands of employees save millions of Word and Excel files, which can be deduped and (somewhat) compressed. But if your use case is running VMs for different purposes, or storing video and photo files, these features will not work nearly as well: there is not much that can be deduped, and audio/photo/video is already compressed. You will end up with only marginal savings (and an effective cost close to the original $12,000/TB).
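To see how sensitive the “effective” price is to the assumed savings, here is a small Python sketch. The $12,000/TB price and the 5x ratio come from the example above; the other two ratios (2x and 1.1x) are purely hypothetical values chosen for illustration, not measurements, and `effective_price_per_tb` is a name invented for this sketch.

```python
def effective_price_per_tb(price_per_usable_tb: float, reduction_ratio: float) -> float:
    """Price per TB of stored data, given a data reduction ratio.

    A ratio of 5.0 means 5 TB of customer data fits in 1 TB of usable space.
    A ratio of 1.0 means no savings at all.
    """
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return price_per_usable_tb / reduction_ratio


if __name__ == "__main__":
    list_price = 12_000  # $/TB usable, from the example in the text

    # Only the 5x figure comes from the text; the others are hypothetical.
    scenarios = [
        ("vendor assumption (5x)", 5.0),
        ("hypothetical mixed workload (2x)", 2.0),
        ("hypothetical pre-compressed media (1.1x)", 1.1),
    ]
    for label, ratio in scenarios:
        price = effective_price_per_tb(list_price, ratio)
        print(f"{label}: ${price:,.0f}/TB effective")
```

The point of the sketch is simply that the quoted $2,400/TB holds only at the full 5x ratio; as the ratio drops toward 1, the effective price climbs back toward the original $12,000/TB.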

TO BE CONTINUED …

Later on, we’ll get back to storage features and their impact on system performance.

This is a series of articles on storage pricing; you can find Part 2 and Part 3 below. Meanwhile, feel free to share your views in the comments, forward the article to a friend, or get in touch with us at info@storpool.slm.dev

Go to “A practical guide on storage pricing in the 21-st century: PART 2”

Go to “A practical guide on storage pricing in the 21-st century: PART 3”
