This is a test of a StorPool Distributed Storage system consisting of 20 SSDs spread across 5 storage servers (nodes). The system keeps 2 copies of each piece of data, each on a different server, to provide redundancy (i.e. 2x replication).

Parameters of the system under test:

5 storage nodes, each with:

– Supermicro X10SRi-F server

– CPU: Intel Xeon E5-1620v4

– RAM: 4 x 8 GB DDR4 RDIMM @2133 MT/s

– NIC: 2-port Intel X520-2 (82599ES) 10GbE NIC

– HBA: Intel C610 AHCI

– 4x Samsung PM863a 1.92 TB SSDs

– Linux kernel 2.6.32-642.11.1.el6.x86_64

– StorPool software version: 16.01.779

Total storage system resources:

– 5 storage nodes

– 10 x 10GbE ports

– 20 x PM863a 1.92 TB SSDs: 38.4 TB of raw space, or 17.45 TB of usable space (before gains from space-saving features)
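The capacity figures above can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers from this report (20 drives of 1.92 TB, 2 copies, and the 16,256 GiB usable figure quoted for the test volumes' allocation policy) and converts between decimal TB and binary GiB. The report does not break down the gap between the post-replication space and the usable space, so the code only measures it and does not explain it.

```python
# Back-of-the-envelope capacity check for the tested cluster.
# Inputs come from the report; everything else is derived arithmetic.

TB = 10**12   # decimal terabyte, as used on SSD datasheets
GIB = 2**30   # binary gibibyte, as used for volume sizes


def capacity_summary(drives=20, drive_tb=1.92, copies=2, usable_gib=16_256):
    raw_tb = drives * drive_tb             # total raw flash
    replicated_tb = raw_tb / copies        # upper bound after 2x replication
    usable_tb = usable_gib * GIB / TB      # reported usable space, in TB
    return {
        "raw_tb": raw_tb,
        "replicated_tb": replicated_tb,
        "usable_tb": usable_tb,
        # share of the post-replication space that is reported as usable
        "usable_ratio": usable_tb / replicated_tb,
    }


if __name__ == "__main__":
    for key, value in capacity_summary().items():
        print(f"{key}: {value:.2f}")
```

This shows that 16,256 GiB is the same quantity as the 17.45 TB usable figure, and that it is roughly 91% of the 19.2 TB left after 2x replication.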

Network:

– 2x 10GbE SFP+ switches

– Direct-attach SFP+ cables

Initiators:

– This test is performed with the workload and initiator software running on the same servers as the storage system, i.e. this setup mimics a hyper-converged deployment

– There is no specific data affinity configured in this system, so roughly 1/5 of all I/O happens to land on the same node as the initiator.
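The "1/5 local" figure follows from having no data affinity across 5 nodes: if each request's target node is effectively uniform, the chance it is the initiator's own node is 1/N. The sketch below is a simplified model under that assumption (one target node per request, picked uniformly at random); it does not reflect how StorPool actually places or serves data.

```python
import random


def local_io_fraction(nodes=5, ios=100_000, seed=1):
    """Estimate the share of I/O that lands on the initiator's own node
    when each request's target node is picked uniformly at random
    (a simplifying model of 'no data affinity')."""
    rng = random.Random(seed)
    initiator = 0  # label the initiator's node as node 0
    local = sum(1 for _ in range(ios) if rng.randrange(nodes) == initiator)
    return local / ios


if __name__ == "__main__":
    print(f"simulated: {local_io_fraction():.3f}, expected: {1 / 5:.3f}")
```

Under this model the local share shrinks as 1/N when nodes are added, which is why the remote network path matters more in larger clusters.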

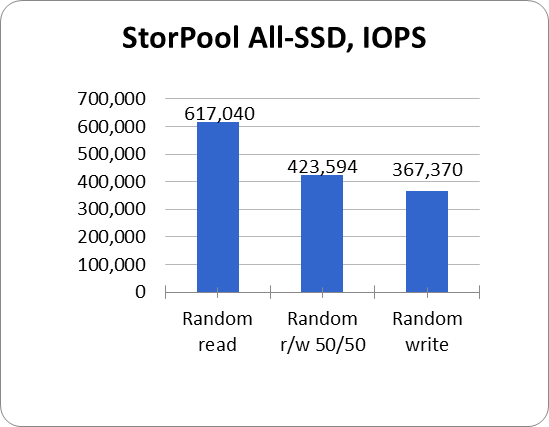

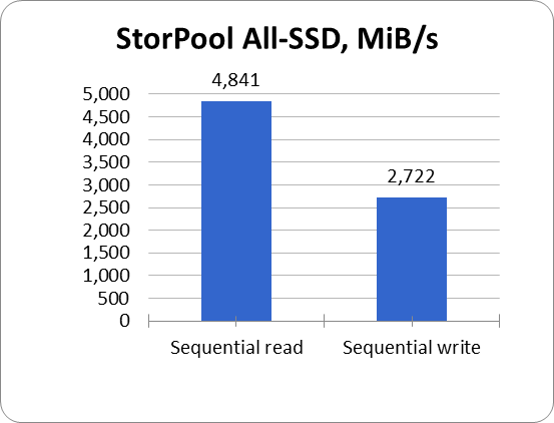

Measured total system throughput:

– The test was run from all 5 servers (acting as client hosts) in parallel to show total system throughput

– The test was performed on 5 x 100 GiB fully allocated volumes

– Volumes were configured to store 2 copies on SSDs, taking a total of 1.1 TB of raw space (including copies and data protection). This allocation policy provides a maximum of 16,256 GiB of usable space from the available SSDs

– The test was conducted with FIO 2.0.13, using asynchronous I/O (AIO) and direct I/O against the Linux block device
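A minimal sketch of this setup, assuming "AIO" means fio's `libaio` engine and "Direct" means `--direct=1`. The report does not list the exact job parameters, so the workload type, block size, queue depth, runtime and device paths below are all hypothetical placeholders. The first function checks the raw footprint of the test volumes (5 x 100 GiB, 2 copies).

```python
# Sketch of the throughput-test setup: raw footprint of the test volumes
# plus one fio invocation per client host. Workload parameters (rw, bs,
# iodepth, runtime) and device paths are HYPOTHETICAL; the report only says
# FIO 2.0.13 with AIO and direct I/O on a Linux block device.
import shlex

GIB = 2**30
TB = 10**12


def raw_footprint_tb(volumes=5, volume_gib=100, copies=2):
    """Raw space consumed by fully allocated, replicated volumes, in TB."""
    return volumes * volume_gib * copies * GIB / TB


def fio_command(device, rw="randread", bs="4k", iodepth=32, runtime_s=60):
    """Build a fio argument list for one volume (libaio + O_DIRECT)."""
    return [
        "fio",
        f"--name={rw}-{bs}",
        f"--filename={device}",
        "--ioengine=libaio",  # Linux native asynchronous I/O
        "--direct=1",         # bypass the page cache
        f"--rw={rw}",
        f"--bs={bs}",
        f"--iodepth={iodepth}",
        f"--runtime={runtime_s}",
        "--time_based",       # run for the full runtime
    ]


if __name__ == "__main__":
    print(f"raw footprint: {raw_footprint_tb():.2f} TB")
    # One command per host, each against its own (hypothetical) volume device.
    for host in range(1, 6):
        print(shlex.join(fio_command(f"/dev/example-vol{host}")))
```

The footprint works out to 1,000 GiB, about 1.07 TB, which matches the roughly 1.1 TB quoted above. In a run like this, each command would be started on its own host at the same time and the per-host results summed to get total system throughput.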

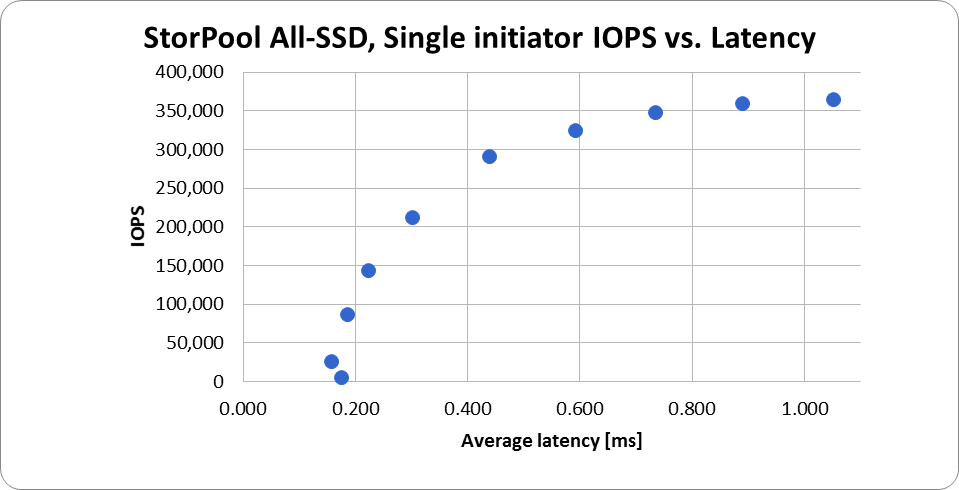

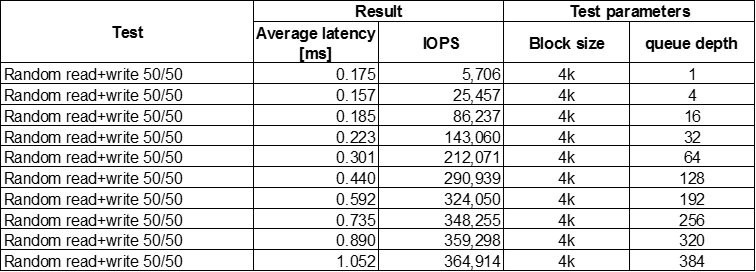

Measured storage latency under different load levels:

– The test was performed from a single initiator at different load levels to show latency under load

– Queue depth starts at 1 and is increased until latency exceeds 1 ms

– At the high end of the queue depth scale, this test is limited by the capabilities of the initiator, not by the storage system as a whole
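The sweep procedure described above can be sketched as a simple loop. This is a hypothetical illustration: doubling the queue depth at each step is an assumption, since the report does not say how it was stepped, and `measure_latency_ms` stands in for a real benchmark run. IOPS at each point is derived from Little's law (outstanding I/Os = IOPS x latency). The toy latency model is made up for illustration and is not measured data.

```python
# Hypothetical sketch of the latency-under-load sweep: start at queue
# depth 1 and keep raising it until mean latency exceeds 1 ms.
# Doubling the queue depth is an ASSUMPTION. `measure_latency_ms` stands
# in for a real benchmark run (e.g. one fio job at the given iodepth).
from typing import Callable, List, Optional, Tuple

Point = Tuple[int, float, float]  # (queue_depth, latency_ms, iops)


def qd_sweep(measure_latency_ms: Callable[[int], float],
             limit_ms: float = 1.0,
             max_qd: int = 1024) -> List[Point]:
    """Return measured points, stopping after the first point above
    `limit_ms` (or at `max_qd` as a safety cap)."""
    points = []
    qd = 1
    while qd <= max_qd:
        lat_ms = measure_latency_ms(qd)
        # Little's law: outstanding I/Os = throughput x latency
        iops = qd / (lat_ms / 1000.0)
        points.append((qd, lat_ms, iops))
        if lat_ms > limit_ms:
            break
        qd *= 2
    return points


def best_under_limit(points: List[Point],
                     limit_ms: float = 1.0) -> Optional[Point]:
    """Highest-IOPS point whose latency stays at or below the limit."""
    ok = [p for p in points if p[1] <= limit_ms]
    return max(ok, key=lambda p: p[2]) if ok else None


def toy_latency_ms(qd: int) -> float:
    """Made-up latency model for illustration only, not measured data."""
    return 0.15 + 0.01 * qd


if __name__ == "__main__":
    pts = qd_sweep(toy_latency_ms)
    for qd, lat, iops in pts:
        print(f"QD={qd:4d}  lat={lat:.2f} ms  ~{iops:,.0f} IOPS")
    print("best sub-1 ms point:", best_under_limit(pts))
```

With a single initiator, the IOPS curve eventually flattens because the initiator itself saturates, which is the limitation the last bullet point describes.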

Note: When used from multiple initiators, this storage system can deliver even more random read/write 4K IOPS at sub-1 ms latency.

The total cost of this system as tested was approximately $69,600 over 36 months (prices as of January 2017). This works out to about $4 per usable GB and $0.11 per IOPS. The system scales online and without disruption, in small increments.
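The per-GB figure can be reproduced from the numbers in this report ($69,600 total, 17.45 TB usable). The IOPS value behind the $0.11/IOPS figure appears only in the charts and is not restated in the text, so the sketch leaves it as a parameter rather than guessing it. The monthly figure is simple derived arithmetic, not a quoted price.

```python
# Reproduces the cost-efficiency arithmetic from the report. Total cost,
# term and usable capacity come from the text; the IOPS figure behind
# $0.11/IOPS is in the charts only, so it is a caller-supplied parameter.


def cost_per_gb(total_usd=69_600, usable_gb=17_450):
    """Dollars per usable (decimal) GB."""
    return total_usd / usable_gb


def cost_per_iops(total_usd, iops):
    """Dollars per IOPS for a given measured IOPS figure."""
    return total_usd / iops


def monthly_cost(total_usd=69_600, months=36):
    """Total cost spread evenly over the term."""
    return total_usd / months


if __name__ == "__main__":
    print(f"${cost_per_gb():.2f} per usable GB")  # about $3.99
    print(f"${monthly_cost():,.2f} per month over 36 months")
```

To check the $/IOPS figure, pass the total cost and the measured IOPS value read from the charts to `cost_per_iops`.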

If you have any questions, feel free to contact us at info@storpool.slm.dev